tl;dr; Not very slow.

At work, we have some very large .json that get included in a Docker image. The Node server then opens these files at runtime and displays certain data from that. To make the Docker image not too large, we compress these .json files at build-time. We compress the .json files with Brotli to make a .json.br file. Then, in the Node server code, we read them in and decompress them at runtime. It looks something like this:

export function readCompressedJsonFile(xpath) {

return JSON.parse(brotliDecompressSync(fs.readFileSync(xpath)))

}

The advantage of compressing them first, at build time, which is GitHub Actions, is that the Docker image becomes smaller which is advantageous when shipping that image to a registry and asking Azure App Service to deploy it. But I was wondering, is this a smart trade-off? In a sense, why compromise on runtime (which faces users) to save time and resources at build-time, which is mostly done away from the eyes of users? The question was; how much overhead is it to have to decompress the files after its data has been read from disk to memory?

The benchmark

The files I test with are as follows:

❯ ls -lh pageinfo*

-rw-r--r-- 1 peterbe staff 2.5M Jan 19 08:48 pageinfo-en-ja-es.json

-rw-r--r-- 1 peterbe staff 293K Jan 19 08:48 pageinfo-en-ja-es.json.br

-rw-r--r-- 1 peterbe staff 805K Jan 19 08:48 pageinfo-en.json

-rw-r--r-- 1 peterbe staff 100K Jan 19 08:48 pageinfo-en.json.br

There are 2 groups:

- Only English (

en)

- 3 times larger because it has English, Japanese, and Spanish

And for each file, you can see the effect of having compressed them with Brotli.

- The smaller JSON file compresses 8x

- The larger JSON file compresses 9x

Here's the benchmark code:

import fs from "fs";

import { brotliDecompressSync } from "zlib";

import { Bench } from "tinybench";

const JSON_FILE = "pageinfo-en.json";

const BROTLI_JSON_FILE = "pageinfo-en.json.br";

const LARGE_JSON_FILE = "pageinfo-en-ja-es.json";

const BROTLI_LARGE_JSON_FILE = "pageinfo-en-ja-es.json.br";

function f1() {

const data = fs.readFileSync(JSON_FILE, "utf8");

return Object.keys(JSON.parse(data)).length;

}

function f2() {

const data = brotliDecompressSync(fs.readFileSync(BROTLI_JSON_FILE));

return Object.keys(JSON.parse(data)).length;

}

function f3() {

const data = fs.readFileSync(LARGE_JSON_FILE, "utf8");

return Object.keys(JSON.parse(data)).length;

}

function f4() {

const data = brotliDecompressSync(fs.readFileSync(BROTLI_LARGE_JSON_FILE));

return Object.keys(JSON.parse(data)).length;

}

console.assert(f1() === 2633);

console.assert(f2() === 2633);

console.assert(f3() === 7767);

console.assert(f4() === 7767);

const bench = new Bench({ time: 100 });

bench.add("f1", f1).add("f2", f2).add("f3", f3).add("f4", f4);

await bench.warmup();

await bench.run();

console.table(bench.table());

Here's the output from tinybench:

┌─────────┬───────────┬─────────┬────────────────────┬──────────┬─────────┐

│ (index) │ Task Name │ ops/sec │ Average Time (ns) │ Margin │ Samples │

├─────────┼───────────┼─────────┼────────────────────┼──────────┼─────────┤

│ 0 │ 'f1' │ '179' │ 5563384.55941942 │ '±6.23%' │ 18 │

│ 1 │ 'f2' │ '150' │ 6627033.621072769 │ '±7.56%' │ 16 │

│ 2 │ 'f3' │ '50' │ 19906517.219543457 │ '±3.61%' │ 10 │

│ 3 │ 'f4' │ '44' │ 22339166.87965393 │ '±3.43%' │ 10 │

└─────────┴───────────┴─────────┴────────────────────┴──────────┴─────────┘

Note, this benchmark is done on my 2019 Intel MacBook Pro. This disk is not what we get from the Apline Docker image (running inside Azure App Service). To test that would be a different story. But, at least we can test it in Docker locally.

I created a Dockerfile that contains...

ARG NODE_VERSION=20.10.0

FROM node:${NODE_VERSION}-alpine

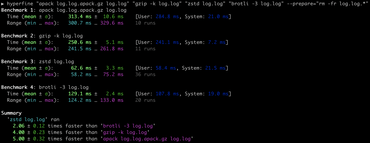

and run the same benchmark in there by running docker composite up --build. The results are:

┌─────────┬───────────┬─────────┬────────────────────┬──────────┬─────────┐

│ (index) │ Task Name │ ops/sec │ Average Time (ns) │ Margin │ Samples │

├─────────┼───────────┼─────────┼────────────────────┼──────────┼─────────┤

│ 0 │ 'f1' │ '151' │ 6602581.124978315 │ '±1.98%' │ 16 │

│ 1 │ 'f2' │ '112' │ 8890548.4166656 │ '±7.42%' │ 12 │

│ 2 │ 'f3' │ '44' │ 22561206.40002191 │ '±1.95%' │ 10 │

│ 3 │ 'f4' │ '37' │ 26979896.599974018 │ '±1.07%' │ 10 │

└─────────┴───────────┴─────────┴────────────────────┴──────────┴─────────┘

Analysis/Conclusion

First, focussing on the smaller file: Processing the .json is 25% faster than the .json.br file

Then, the larger file: Processing the .json is 16% faster than the .json.br file

So that's what we're paying for a smaller Docker image. Depending on the size of the .json file, your app runs ~20% slower at this operation. But remember, as a file on disk (in the Docker image), it's ~8x smaller.

I think, in conclusion: It's a small price to pay. It's worth doing. Your context depends.

Keep in mind the numbers there to process that 300KB pageinfo-en-ja-es.json.br file, it was able to do that 37 times in one second. That means it took 27 milliseconds to process that file!

The caveats

To repeat, what was mentioned above: This was run in my Intel MacBook Pro. It's likely to behave differently in a real Docker image running inside Azure.

The thing that I wonder the most about is arguably something that actually doesn't matter. 🙃

When you ask it to read in a .json.br file, there's less data to ask from the disk into memory. That's a win. You lose on CPU work but gain on disk I/O. But only the end net result matters so in a sense that's just an "implementation detail".

Admittedly, I don't know if the macOS or the Linux kernel does things with caching the layer between the physical disk and RAM for these files. The benchmark effectively asks "Hey, hard disk, please send me a file called ..." and this could be cached in some layer beyond my knowledge/comprehension. In a real production server, this only happens once because once the whole file is read, decompressed, and parsed, it won't be asked for again. Like, ever. But in a benchmark, perhaps the very first ask of the file is slower and all the other runs are unrealistically faster.

Feel free to clone https://github.com/peterbe/reading-json-files and mess around to run your own tests. Perhaps see what effect async can have. Or perhaps try it with Bun and it's file system API.