At work, we use Brotli (via Node's built-in `zlib` module) to compress these large `.json` files into `.json.br` files. When calling `zlib.brotliCompress` you can pass options that override the default quality level, which is 11 (the maximum). Here's an example at quality 6:

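```js
import { promisify } from 'util'
import zlib from 'zlib'

// Promise-returning version of the callback-based zlib.brotliCompress
const brotliCompress = promisify(zlib.brotliCompress)

const options = {
  params: {
    // Hint to the encoder that the input is UTF-8 text
    [zlib.constants.BROTLI_PARAM_MODE]: zlib.constants.BROTLI_MODE_TEXT,
    // 0 (fastest) to 11 (smallest output); Node's default is 11
    [zlib.constants.BROTLI_PARAM_QUALITY]: 6,
  },
}

export async function compress(data) {
  return brotliCompress(data, options)
}
```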
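
If you'd rather choose the quality per call than hard-code 6, one minimal sketch is to build the options from a parameter and write the output next to the input as `.json.br`. The `compressFile` helper and its `quality` argument are hypothetical, not part of the setup described above.

```javascript
import { readFile, writeFile } from 'fs/promises'
import { promisify } from 'util'
import zlib from 'zlib'

const brotliCompress = promisify(zlib.brotliCompress)

// Hypothetical helper: compresses `path` (e.g. data.json) into `${path}.br`
// at the given Brotli quality, from 0 (fastest) to 11 (smallest output).
export async function compressFile(path, quality = 6) {
  const data = await readFile(path)
  const compressed = await brotliCompress(data, {
    params: {
      [zlib.constants.BROTLI_PARAM_MODE]: zlib.constants.BROTLI_MODE_TEXT,
      [zlib.constants.BROTLI_PARAM_QUALITY]: quality,
    },
  })
  await writeFile(`${path}.br`, compressed)
  // Return both sizes so callers can compare quality levels.
  return { originalBytes: data.length, compressedBytes: compressed.length }
}
```

For example, `await compressFile('data.json', 11)` would write `data.json.br` at the maximum quality.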

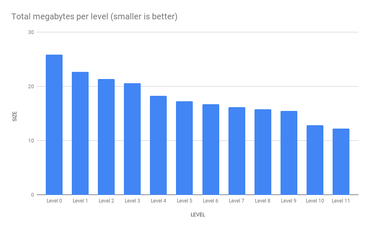

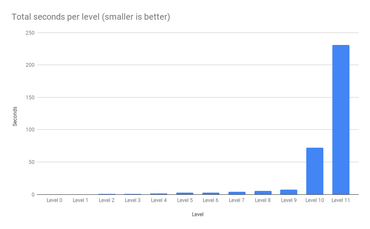

But what if you mess with that number? Surely a higher quality makes the files smaller, but at what cost? Well, I wrote a Node script that measured how long it took to synchronously compress 6 large (~25MB each) `.json` files at each quality level, and how big the results were. Then I put the numbers into a Google spreadsheet, and voila:

Size

Time

It's miles away from rocket science, but I thought it was a cute way to visualize, and understand, the quality option.
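
The script itself isn't included here. As a rough sketch of the same kind of measurement, assuming every quality level from 0 to 11 is tested and that wall-clock time from `performance.now()` is good enough, something like the following would produce rows you could paste into a spreadsheet. The `benchmark` function and the `bench.mjs` file name are hypothetical; it uses `zlib.brotliCompressSync` to match the synchronous approach described above.

```javascript
import { readFileSync } from 'fs'
import { performance } from 'perf_hooks'
import zlib from 'zlib'

const { constants } = zlib

// Hypothetical benchmark: compress one buffer at every Brotli quality level
// (BROTLI_MIN_QUALITY = 0 up to BROTLI_MAX_QUALITY = 11), recording size and time.
export function benchmark(buffer) {
  const rows = []
  for (let q = constants.BROTLI_MIN_QUALITY; q <= constants.BROTLI_MAX_QUALITY; q++) {
    const start = performance.now()
    const compressed = zlib.brotliCompressSync(buffer, {
      params: {
        [constants.BROTLI_PARAM_MODE]: constants.BROTLI_MODE_TEXT,
        [constants.BROTLI_PARAM_QUALITY]: q,
      },
    })
    const ms = performance.now() - start
    rows.push({ quality: q, bytes: compressed.length, ms })
  }
  return rows
}

// Usage: node bench.mjs file1.json file2.json ...
// Prints CSV lines (file, quality, bytes, milliseconds).
for (const file of process.argv.slice(2)) {
  for (const { quality, bytes, ms } of benchmark(readFileSync(file))) {
    console.log(`${file},${quality},${bytes},${ms.toFixed(1)}`)
  }
}
```

Expect the high quality levels (10 and 11) to be noticeably slower on ~25MB inputs.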

Comments

That's super interesting! So if time and compute aren't limiting, one can get quite a bit smaller files!

I wonder if this impacts decompression in any way? 🤔

Could you make the results available as text as well?