When you use a web framework like Tornado, which is single-threaded with an event loop (like Node.js, if you're familiar with that), and you need persistence (i.e. a database), there is one important question you need to ask yourself:

Is the query fast enough that I don't need to do it asynchronously?

If it's going to be a really fast query (for example, selecting a small recordset by key (which is indexed)) it'll be quicker to just do it in a blocking fashion. It means less CPU work to jump between the events.

However, if the query is going to be potentially slow (like a complex and data-intensive report) it's better to execute the query asynchronously, do something else and continue once the database gets back with a result. If you don't, all other requests to your web server might time out.
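To make the slow-query case concrete, here is a minimal sketch. It uses the standard library's asyncio as a stand-in for Tornado's event loop, and `time.sleep` / `asyncio.sleep` as stand-ins for a blocking and a non-blocking database driver. The handler names and the 0.2 second delay are made up for illustration.

```python
import asyncio
import time

DELAY = 0.2  # pretend each database query takes this long (assumption)


async def blocking_handler():
    # time.sleep() stands in for a blocking driver call: nothing else
    # on the event loop can run until it returns.
    time.sleep(DELAY)
    return "done"


async def async_handler():
    # asyncio.sleep() stands in for a non-blocking driver call: the
    # event loop is free to serve other requests while we wait.
    await asyncio.sleep(DELAY)
    return "done"


async def serve_two(handler):
    """Serve two simultaneous 'requests' and return the wall clock time."""
    start = time.perf_counter()
    await asyncio.gather(handler(), handler())
    return time.perf_counter() - start


def compare():
    blocking = asyncio.run(serve_two(blocking_handler))
    non_blocking = asyncio.run(serve_two(async_handler))
    return blocking, non_blocking


if __name__ == "__main__":
    blocking, non_blocking = compare()
    print("blocking:     %.2fs" % blocking)
    print("non-blocking: %.2fs" % non_blocking)
```

With the blocking handler the second request has to wait for the first, so the two take roughly twice the delay. With the non-blocking handler they overlap. For a query that takes a fraction of a millisecond, that overlap buys you nothing and the extra event juggling is pure overhead.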

Another important question whenever you work with a database is:

Would it be a disaster if you intend to store something that ends up not getting stored on disk?

This question is related to the D in ACID and doesn't have anything specific to do with Tornado. However, the reason you're using Tornado is probably because it's much more performant than more convenient alternatives like Django. So, if performance is so important, are durable writes important too?

Let's cut to the chase... I wanted to see how different databases perform when integrating them in Tornado. But let's not just look at different databases, let's also evaluate different ways of using them; either blocking or non-blocking.

What the benchmark does is:

- On one single Python process...

- For each database engine...

- Create X records of something containing a string, a datetime, a list and a floating point number...

- Edit each of these records which will require a fetch and an update...

- Delete each of these records...

I can vary the number of records ("X") and sum the total wall clock time it takes for each database engine to complete all of these tasks. That way you get an insert, a select, an update and a delete. Realistically, it's likely you'll get a lot more selects than any of the other operations.
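The steps above can be sketched roughly like this. It is not the actual benchmark code: `DictEngine` is a hypothetical in-memory stand-in for a real driver, and the field names `topic` and `when` are made up for illustration.

```python
import datetime
import time
import uuid


class DictEngine:
    """Hypothetical in-memory engine; a real one would wrap a database driver."""

    def __init__(self):
        self.records = {}

    def create(self, record):
        key = uuid.uuid4().hex
        self.records[key] = dict(record)
        return key

    def fetch(self, key):
        return dict(self.records[key])

    def update(self, key, record):
        self.records[key] = dict(record)

    def delete(self, key):
        del self.records[key]


def benchmark(engine, how_many):
    """Create, edit and delete `how_many` records; return total seconds."""
    start = time.perf_counter()
    keys = []
    for i in range(how_many):
        keys.append(engine.create({
            "topic": "Talk %d" % i,            # a string
            "when": datetime.datetime.now(),   # a datetime
            "tags": ["one", "two"],            # a list
            "duration": 0.5 + i,               # a floating point number
        }))
    for key in keys:
        record = engine.fetch(key)  # editing needs a fetch...
        record["duration"] += 1.0
        engine.update(key, record)  # ...and an update
    for key in keys:
        engine.delete(key)
    return time.perf_counter() - start
```

Calling `benchmark(DictEngine(), 10)` returns the wall clock time in seconds for all three phases combined, which is the number being compared between engines.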

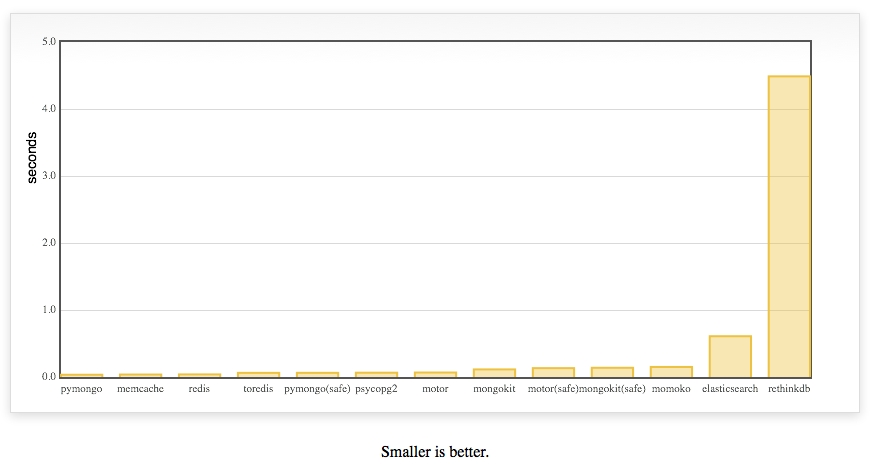

And the winner is:

pymongo!! Using the blocking version without doing safe writes.

Let me explain some of those engines

- pymongo is the blocking pure python engine

- with the redis, toredis and memcache a document ID is generated with uuid4, converted to JSON and stored as a key

- toredis is a redis wrapper for Tornado

- when it says (safe) on the engine it means to tell MongoDB to not respond until it has with some confidence written the data

- motor is an asynchronous MongoDB driver specifically for Tornado

- MySQL doesn't support arrays (unlike PostgreSQL) so instead the tags field is stored as text and transformed back and forth as JSON

- None of these databases have been tuned for performance. They're all fresh out-of-the-box installs on OSX with homebrew

- None of these databases have indexes apart from ElasticSearch where everything is indexed

- momoko is an awesome wrapper for psycopg2 which works asynchronously specifically with Tornado

- memcache is not persistent but I wanted to include it as a reference

- All JSON encoding and decoding is done using ultrajson which should work to memcache, redis, toredis and mysql's advantage.

- mongokit is a thin wrapper on pymongo that makes it feel more like an ORM

- A lot of these can be optimized by doing bulk operations but I don't think that's fair

- I don't yet have a way of measuring memory usage for each driver+engine but that's not really what this blog post is about

- I'd love to do more work on running these benchmarks on concurrent hits to the server. However, with blocking drivers each request (other than the first one) would have to sit there and wait, so the user experience would be poor, and the total time wouldn't get any shorter either.

- I use the official elasticsearch driver but am curious to also add Tornado-es some day which will do asynchronous HTTP calls over to ES.
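As a rough illustration of the key-value approach described in the list, here is a sketch of storing a document as JSON under a uuid4 key. A plain dict stands in for the redis or memcache client, the standard `json` module stands in for ultrajson, and the field names other than `tags` and `duration` are assumptions.

```python
import datetime
import json
import uuid

store = {}  # stand-in for a redis or memcache client


def save(document):
    """Store the document as a JSON string under a fresh uuid4 key."""
    key = uuid.uuid4().hex
    # JSON has no datetime type, so it goes in as an ISO 8601 string
    payload = dict(document, when=document["when"].isoformat())
    store[key] = json.dumps(payload)
    return key


def load(key):
    """Decode the JSON string and restore the datetime."""
    document = json.loads(store[key])
    document["when"] = datetime.datetime.fromisoformat(document["when"])
    return document
```

The same trick is what makes the tags list survive in a store that only knows about strings: the list is encoded on the way in and decoded on the way out.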

You can run the benchmark yourself

The code is here on github. The following steps should work:

    $ virtualenv fastestdb
    $ source fastestdb/bin/activate
    $ git clone https://github.com/peterbe/fastestdb.git
    $ cd fastestdb
    $ pip install -r requirements.txt
    $ python tornado_app.py

Then fire up http://localhost:8000/benchmark?how_many=10 and see if you can get it running.

Note: You might need to mess around with some of the hardcoded connection details in the file tornado_app.py.

Discussion

Before the lynch mob of HackerNews kill me for saying something positive about MongoDB; I'm perfectly aware of the discussions about large datasets and the complexities of managing them. Any flametroll comments about "web scale" will be deleted.

I think MongoDB does a really good job here. It's faster than Redis and Memcache but, unlike those key-value stores, with MongoDB you can, if you need to, do actual queries (e.g. select all talks where the duration is greater than 0.5). MongoDB does its serialization between Python and the database using a binary format called BSON but, mind you, the Redis and Memcache drivers also get to use a binary (compiled) JSON encoder/decoder.
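To show what "actual queries" buys you, here is a small sketch of the duration example against a key-value store. A dict of JSON strings stands in for Redis or Memcache, and the keys and talk topics are made up.

```python
import json

# Stand-in for a key-value store: opaque JSON strings behind keys.
store = {
    "a1": json.dumps({"topic": "Short talk", "duration": 0.25}),
    "b2": json.dumps({"topic": "Long talk", "duration": 1.5}),
    "c3": json.dumps({"topic": "Half-hour talk", "duration": 0.5}),
}


def talks_longer_than(minimum):
    """Decode every value and filter in Python; the store can't do it."""
    matches = []
    for value in store.values():
        talk = json.loads(value)
        if talk["duration"] > minimum:
            matches.append(talk["topic"])
    return sorted(matches)


# With pymongo the database does the filtering instead, roughly:
#   collection.find({"duration": {"$gt": 0.5}})
```

The key-value version has to pull down and decode every record to answer the question, which gets expensive quickly as the dataset grows.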

The conclusion is: be aware of what you want to do with your data, and of where performance matters versus durability.

What's next

Some of those drivers will work on PyPy, which I'm looking forward to testing. Drivers built on cffi should work, for example psycopg2cffi for PostgreSQL.

Also, an asynchronous version of elasticsearch should be interesting.

UPDATE 1

Today I installed RethinkDB 2.0 and included it in the test.

It was added in this commit and improved in this one.

I've been talking to the core team at RethinkDB to try to fix this.