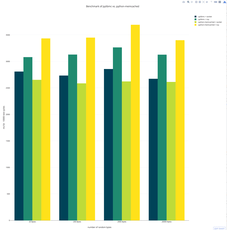

I ran another couple of benchmarks of different cache backends in Django. This is an extension of, and update to, Fastest cache backend possible for Django, published a couple of months ago. This round of benchmarking isn't as elaborate as the last one: fewer tests and fewer variables.

I have another app where I use a lot of caching. This web application will run its cache server on the same virtual machine. So no separation of cache server and web head(s). Just one Django server talking to localhost:11211 (memcached's default port) and localhost:6379 (Redis's default port).

Also in this benchmark, the keys were slightly smaller. To simulate my application's "realistic needs" I made the benchmark land on roughly 80% cache hits and 20% cache misses. The cache keys were 1 to 3 characters long and the cache values were lists of strings, always 30 items long (e.g. `len(['abc', 'def', 'cba', ... , 'cab']) == 30`).
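To make that workload concrete, here is a minimal sketch of how such a run could be shaped. It is not the original benchmark code. `DictCache` is a hypothetical in-memory stand-in that mimics only the `get`/`set` part of Django's cache API, and warming 80% of a fixed key pool without writing misses back is my assumption about one simple way to land on the stated hit ratio.

```python
import random
import string
import time


class DictCache:
    """Hypothetical stand-in exposing the get/set subset of Django's cache API."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def set(self, key, value, timeout=None):
        # The timeout is accepted for API compatibility but ignored here.
        self._data[key] = value


def random_key(rng):
    # Keys are 1 to 3 characters long, as described in the post.
    length = rng.randint(1, 3)
    return "".join(rng.choice(string.ascii_lowercase) for _ in range(length))


def random_value(rng):
    # Values are lists of exactly 30 short strings.
    return ["".join(rng.choice("abc") for _ in range(3)) for _ in range(30)]


def run_benchmark(cache, iterations=10000, hit_ratio=0.8, seed=0):
    rng = random.Random(seed)
    keys = sorted({random_key(rng) for _ in range(500)})
    # Warm only a fraction of the key pool so about that fraction of reads hit.
    for key in keys[: int(len(keys) * hit_ratio)]:
        cache.set(key, random_value(rng), 60)
    hits = 0
    start = time.perf_counter()
    for _ in range(iterations):
        if cache.get(rng.choice(keys)) is not None:
            hits += 1
    elapsed = time.perf_counter() - start
    return hits / iterations, elapsed


if __name__ == "__main__":
    ratio, seconds = run_benchmark(DictCache())
    print("hit ratio: {:.2f}, elapsed: {:.4f}s".format(ratio, seconds))
```

Swapping `DictCache()` for a real Django cache object (for example one looked up from `django.core.cache.caches`) should work unchanged, since only `get` and `set` are used.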

Also, in this benchmark I was too lazy to test all the different parsers, serializers and compressors that django-redis supports. I only tested `python-memcached==1.58` versus `django-redis==4.8.0` versus `django-redis==4.8.0` with `msgpack-python==0.4.8`.
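For reference, the three setups could look roughly like this in a Django settings file. This is a sketch, not the settings actually used: the alias names are borrowed from the results table, and the Redis database index `/0` is an assumption.

```python
# Hypothetical settings.py fragment for the three configurations compared.
CACHES = {
    "memcache": {
        # Django's python-memcached backend on memcached's default port.
        "BACKEND": "django.core.cache.backends.memcached.MemcachedCache",
        "LOCATION": "127.0.0.1:11211",
    },
    "redis": {
        # django-redis with its default serializer.
        "BACKEND": "django_redis.cache.RedisCache",
        "LOCATION": "redis://127.0.0.1:6379/0",  # database 0 is an assumption
        "OPTIONS": {"CLIENT_CLASS": "django_redis.client.DefaultClient"},
    },
    "redis_msgpack": {
        # Same server, but values are serialized with msgpack.
        "BACKEND": "django_redis.cache.RedisCache",
        "LOCATION": "redis://127.0.0.1:6379/0",
        "OPTIONS": {
            "CLIENT_CLASS": "django_redis.client.DefaultClient",
            "SERIALIZER": "django_redis.serializers.msgpack.MSGPackSerializer",
        },
    },
}
```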

The results are quite "boring". There's basically not enough difference to matter.

| Config | Average | Median | Compared to fastest |
|---|---|---|---|
| memcache | 4.51s | 3.90s | 100% |
| redis | 5.41s | 4.61s | 84.7% |
| redis_msgpack | 5.16s | 4.40s | 88.8% |
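The post doesn't spell out how the "Compared to fastest" column is derived. The figures look consistent with dividing the fastest median by each config's median, so here is a small sketch under that assumption. Because it starts from the rounded medians in the table, it only reproduces the published percentages to within a few tenths of a percentage point.

```python
def compared_to_fastest(medians):
    """Return each config's speed as a percentage of the fastest one.

    Assumes the column is (fastest median / this median) * 100.
    """
    fastest = min(medians.values())
    return {name: round(100 * fastest / value, 1) for name, value in medians.items()}


# Median timings in seconds, copied from the table above.
medians = {"memcache": 3.90, "redis": 4.61, "redis_msgpack": 4.40}
percentages = compared_to_fastest(medians)

if __name__ == "__main__":
    for name, pct in percentages.items():
        print("{}: {}%".format(name, pct))
```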

UPDATE

As Hal pointed out in the comments, when you know the web server and the memcached server are on the same computer you should use UNIX sockets. They're "obviously" faster since they skip the TCP overhead, at the cost of not working over a network.

Because running memcached on a socket on OSX is a hassle I only have one benchmark. Note! This basically compares good old django.core.cache.backends.memcached.MemcachedCache with two different locations.

| Config | Average | Median | Compared to fastest |
|---|---|---|---|
| 127.0.0.1:11211 | 3.33s | 3.34s | 81.3% |
| unix:/tmp/memcached.sock | 2.66s | 2.71s | 100% |
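In settings terms, the two rows above differ only in `LOCATION`. A sketch of what that could look like follows; the alias names `tcp` and `unix_socket` are made up for illustration.

```python
# Hypothetical settings.py fragment: same backend, two different locations.
BACKEND = "django.core.cache.backends.memcached.MemcachedCache"

CACHES = {
    # Talks to memcached over TCP on localhost.
    "tcp": {"BACKEND": BACKEND, "LOCATION": "127.0.0.1:11211"},
    # Talks to memcached over a UNIX domain socket. memcached has to be
    # started listening on that path, e.g. `memcached -s /tmp/memcached.sock`.
    "unix_socket": {"BACKEND": BACKEND, "LOCATION": "unix:/tmp/memcached.sock"},
}
```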

But there's more! Another option is to use pylibmc, which is a Python client written in C. By the way, the Python I use for these microbenchmarks is Python 3.5.

Unfortunately I'm too lazy/too busy to do a matrix comparison of pylibmc on TCP versus UNIX socket. Here are the comparison results of using python-memcached versus pylibmc:

| Client | Average | Median | Compared to fastest |
|---|---|---|---|
| python-memcached | 3.52s | 3.52s | 62.9% |
| pylibmc | 2.31s | 2.22s | 100% |
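Switching clients in Django is a matter of picking a different backend class. The sketch below assumes both clients talk to memcached over TCP on localhost; the post doesn't say which transport this particular comparison used, and the alias names are illustrative.

```python
# Hypothetical settings.py fragment: same memcached server, two Python clients.
CACHES = {
    "python_memcached": {
        # Backed by the pure-Python python-memcached client.
        "BACKEND": "django.core.cache.backends.memcached.MemcachedCache",
        "LOCATION": "127.0.0.1:11211",  # TCP is an assumption here
    },
    "pylibmc": {
        # Backed by pylibmc, which wraps the C library libmemcached.
        "BACKEND": "django.core.cache.backends.memcached.PyLibMCCache",
        "LOCATION": "127.0.0.1:11211",  # TCP is an assumption here
    },
}
```

Application code can then select either one by alias through `django.core.cache.caches`, so the benchmark itself doesn't need to change between runs.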

UPDATE 2

As luck would have it, someone else has done the matrix comparison of python-memcached vs pylibmc on TCP vs UNIX socket:

Comments

You forgot the most important thing: how fast is it without any cache server? Or perhaps caching is not worth using?

That obviously depends on a zillion things.

But yes, for some things you can use thread-safe global containers in memory if you don't mind repeating the memory for every gunicorn/uwsgi process.

Or locmem.

Lonnen, locmem, like any global container, means the memory has to be "duplicated" per process. With 4 CPUs you might have 9 separate processes and thus 9x the amount of memory usage on the whole server.

But shouldn't locmem be considered in the case of a small, relatively static dataset being accessed, such as configurations or something similar? Supposing the entire dataset to be cached is 1MB and you're running 9 processes, then that's an overhead of 9MB. Wouldn't local memory access be more performant than a remote service access? This may be an edge case but could have serious performance implications in different scenarios, for example if you're looking up 10 different configurations for each request.

Not only are you storing the same piece of data 9 times you also have possibly 8 cache misses.

Definitely more performant than a remote service but a cache server on localhost is just as performant.

You can redo the test using socket connections ;)

If you can cache locally - sockets can be up to 30% faster than TCP/IP based comms.

I'll try. Now where did I put that benchmark code?

See UPDATE above.

Did you try installing `hiredis`? `redis-py` uses it when parsing if available.

Try installing `hiredis` together with `redis-py`; it uses it when parsing if available.