TextBlob is a wonderful Python library. It wraps nltk with a really pleasant API. Out of the box, you get a spell-corrector. From the tutorial:

```python
>>> from textblob import TextBlob
>>> b = TextBlob("I havv goood speling!")
>>> str(b.correct())
'I have good spelling!'
```

The way it works is that the library ships with this text file: en-spelling.txt. It's about 30,000 lines long and looks like this:

```
;;; Based on several public domain books from Project Gutenberg
;;; and frequency lists from Wiktionary and the British National Corpus.
;;; http://norvig.com/big.txt
;;;
a 21155
aah 1
aaron 5
ab 2
aback 3
abacus 1
abandon 32
abandoned 72
abandoning 27
```
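To make the format concrete, here is a minimal sketch of reading it into a word-to-count dictionary. `load_spelling_model` is a hypothetical helper written for this illustration, not part of TextBlob, and it assumes exactly the layout shown above: `;;;` lines are comments, and every other line is a word followed by its count.

```python
from typing import Dict, Iterable


def load_spelling_model(lines: Iterable[str]) -> Dict[str, int]:
    """Parse lines in the en-spelling.txt layout into a {word: count} dict.

    Hypothetical helper: assumes ';;;' lines are comments and every other
    non-blank line is "<word> <count>".
    """
    model: Dict[str, int] = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith(";;;"):
            continue  # skip blank lines and the ;;; header comments
        word, count = line.split()
        model[word] = int(count)
    return model


sample = """;;; Based on several public domain books from Project Gutenberg
;;;
a 21155
aah 1
aaron 5
""".splitlines()

print(load_spelling_model(sample))  # {'a': 21155, 'aah': 1, 'aaron': 5}
```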

That gave me an idea! How about I use the TextBlob API but bring my own text as the training model? It doesn't have to be all that complicated.

The challenge

(Note: All the code I used for this demo is available here: github.com/peterbe/spellthese)

I found this site that lists the "Top 1,000 Baby Boy Names". From that list, I randomly pick a couple of names and mess with their spelling: remove letters, add letters, and swap letters.
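A minimal sketch of that kind of typo generator, assuming one random edit per name. `make_typo` is a hypothetical helper, not the code from the spellthese repo; it retries whenever the edit happens to leave the name unchanged (swapping the two m's in emmett, for example).

```python
import random


def make_typo(name: str, rng: random.Random) -> str:
    """Return a misspelled copy of `name` by removing, adding, or swapping a letter.

    Hypothetical helper for illustration; `name` must be non-empty.
    """
    letters = "abcdefghijklmnopqrstuvwxyz"
    while True:
        operation = rng.choice(["remove", "add", "swap"])
        i = rng.randrange(len(name))
        if operation == "remove" and len(name) > 1:
            typo = name[:i] + name[i + 1:]
        elif operation == "add":
            typo = name[:i] + rng.choice(letters) + name[i:]
        elif operation == "swap" and i < len(name) - 1:
            typo = name[:i] + name[i + 1] + name[i] + name[i + 2:]
        else:
            continue  # this operation isn't possible here; pick again
        if typo != name:  # swapping identical neighbours changes nothing
            return typo


rng = random.Random(42)  # seeded so runs are repeatable
for name in ["jameson", "abel", "wesley", "thomas", "bryson"]:
    print("RIGHT:", name, "TYPOED:", make_typo(name, rng))
```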

So, 5 random names now look like this:

```
▶ python challenge.py
RIGHT: jameson TYPOED: jamesone
RIGHT: abel TYPOED: aabel
RIGHT: wesley TYPOED: welsey
RIGHT: thomas TYPOED: thhomas
RIGHT: bryson TYPOED: brysn
```

Imagine an application where fat-fingered users mistype those names (the TYPOED ones on the right-hand side), and your job is to map them back to the correct spelling.

First, let's use the built-in TextBlob.correct. Slightly simplified, it looks like this:

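```python
from textblob import TextBlob

# get_random_name() is a helper from the demo repo; it returns a
# (correct, typo) pair of strings.
correct, typo = get_random_name()
b = TextBlob(typo)
result = str(b.correct())
right = correct == result
...
```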

And the results:

```
▶ python test.py
ORIGIN    TYPO      RESULT    WORKED?
jesus     jess      less      Fail
austin    ausin     austin    Yes!
julian    juluian   julian    Yes!
carter    crarter   charter   Fail
emmett    emett     met       Fail
daniel    daiel     daniel    Yes!
luca      lua       la        Fail
anthony   anthonyh  anthony   Yes!
damian    daiman    cabman    Fail
kevin     keevin    keeping   Fail
Right 40.0% of the time
```

Buuh! Not very impressive. So what went wrong there? Well, the word met is much more common than emmett, and the same goes for words like less, charter, keeping, etc. You know, because English.
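Word frequency is the tie-breaker. The sketch below, written in the spirit of Norvig's corrector rather than copied from TextBlob, shows that for the typo jess both jesus and less are a single edit away, so if both are in the model, the more frequent word wins. The counts are made up for illustration; they are not the real en-spelling.txt numbers.

```python
def edits1(word: str) -> set:
    """All strings one deletion, transposition, replacement, or insertion away."""
    letters = "abcdefghijklmnopqrstuvwxyz"
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [a + b[1:] for a, b in splits if b]
    transposes = [a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1]
    replaces = [a + c + b[1:] for a, b in splits if b for c in letters]
    inserts = [a + c + b for a, b in splits for c in letters]
    return set(deletes + transposes + replaces + inserts)


# Both the intended name and a common English word are one edit from the typo.
candidates = edits1("jess")
print("jesus" in candidates, "less" in candidates)  # True True

# Hypothetical counts, for illustration only.
toy_counts = {"jesus": 10, "less": 500}
known = [w for w in candidates if w in toy_counts]
print(max(known, key=toy_counts.get))  # less
```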

The solution

The solution is actually really simple. You just crack open the Spelling class from textblob and train it on your own words, like this:

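```python
from textblob import TextBlob
from textblob.en import Spelling

# names: the list of baby boy names the typos were made from
path = "spelling-model.txt"
spelling = Spelling(path=path)
# Write a model file at `path`, built from the names.
spelling.train(" ".join(names), path)
```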
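Since each name appears exactly once in the training text, every count in the resulting model is 1. Here's a rough sketch of the kind of "word count" file that produces, assuming lowercase a-z tokens sorted alphabetically (which matches the head of spelling-model.txt shown later in this post). `build_model_text` is hypothetical and only approximates what `Spelling.train` writes.

```python
import re
from collections import Counter


def build_model_text(text: str) -> str:
    """Approximate the "word count" lines of a trained spelling model.

    Assumption: words are lowercased runs of a-z, one "word count" pair
    per line, sorted alphabetically. TextBlob may differ in details.
    """
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    return "\n".join(f"{word} {count}" for word, count in sorted(counts.items()))


names = ["aaron", "abel", "adam", "adrian"]  # hypothetical subset of the names
print(build_model_text(" ".join(names)))  # every name occurs once, so every count is 1
```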

Now, instead of `result = str(TextBlob(typo).correct())`, we do `result = spelling.suggest(typo)[0][0]`, as demonstrated here:

```python
correct, typo = get_random_name()
b = spelling.suggest(typo)
# suggest() returns (word, confidence) pairs, best first; take the top word.
result = b[0][0]
right = correct == result
...
```
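The `...` in the snippets above hides the bookkeeping. Here's a minimal sketch of how a "Right N% of the time" figure can be computed, with the corrector passed in as a function so the untrained and trained runs are scored the same way. `accuracy` and the `lookup` table are hypothetical stand-ins; with TextBlob you might pass `lambda typo: spelling.suggest(typo)[0][0]` instead.

```python
from typing import Callable, Iterable, Tuple


def accuracy(pairs: Iterable[Tuple[str, str]], corrector: Callable[[str], str]) -> float:
    """Percentage of (correct, typo) pairs where corrector(typo) == correct."""
    pairs = list(pairs)
    if not pairs:
        raise ValueError("need at least one (correct, typo) pair")
    right = sum(1 for correct, typo in pairs if corrector(typo) == correct)
    return 100.0 * right / len(pairs)


# Hypothetical corrector: a fixed lookup table standing in for a spell-checker.
lookup = {"jaun": "juan", "etha": "the"}
pairs = [("juan", "jaun"), ("ethan", "etha")]
print(f"Right {accuracy(pairs, lambda t: lookup.get(t, t)):.1f}% of the time")
```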

So, let's compare the two "side by side" and see how this works out. Here's the output of running with 20 randomly selected names:

```
▶ python test.py
UNTRAINED...
ORIGIN       TYPO         RESULT       WORKED?
juan         jaun         juan         Yes!
ethan        etha         the          Fail
bryson       brysn        bryan        Fail
hudson       hudsn        hudson       Yes!
oliver       roliver      oliver       Yes!
ryan         rnyan        ran          Fail
cameron      caeron       carron       Fail
christopher  hristopher   christopher  Yes!
elias        leias        elias        Yes!
xavier       xvaier       xvaier       Fail
justin       justi        just         Fail
leo          lo           lo           Fail
adrian       adian        adrian       Yes!
jonah        ojnah        noah         Fail
calvin       cavlin       calvin       Yes!
jose         joe          joe          Fail
carter       arter        after        Fail
braxton      brxton       brixton      Fail
owen         wen          wen          Fail
thomas       thoms        thomas       Yes!
Right 40.0% of the time

TRAINED...
ORIGIN       TYPO         RESULT       WORKED?
landon       landlon      landon       Yes
sebastian    sebstian     sebastian    Yes
evan         ean          ian          Fail
isaac        isaca        isaac        Yes
matthew      matthtew     matthew      Yes
waylon       ywaylon      waylon       Yes
sebastian    sebastina    sebastian    Yes
adrian       darian       damian       Fail
david        dvaid        david        Yes
calvin       calivn       calvin       Yes
jose         ojse         jose         Yes
carlos       arlos        carlos       Yes
wyatt        wyatta       wyatt        Yes
joshua       jsohua       joshua       Yes
anthony      antohny      anthony      Yes
christian    chrisian     christian    Yes
tristan      tristain     tristan      Yes
theodore     therodore    theodore     Yes
christopher  christophr   christopher  Yes
joshua       oshua        joshua       Yes
Right 90.0% of the time
```

See, with very little effort you can go from 40% correct to 90% correct.

Note that the output of something like `spelling.suggest('darian')` is actually a list like this: `[('damian', 0.5), ('adrian', 0.5)]`, and you can use that in your application. For example:

```html
<li><a href="?name=damian">Did you mean <b>damian</b></a></li>
<li><a href="?name=adrian">Did you mean <b>adrian</b></a></li>
```
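A minimal sketch that turns such a suggestion list into those list items. `did_you_mean` is a hypothetical helper; it URL-encodes the word for the query string and HTML-escapes it for the markup, which matters if a suggestion can ever be the user's raw input echoed back.

```python
from html import escape
from typing import List, Tuple
from urllib.parse import urlencode


def did_you_mean(suggestions: List[Tuple[str, float]], limit: int = 3) -> str:
    """Render (word, confidence) suggestions as "Did you mean" <li> items."""
    items = []
    for word, _confidence in suggestions[:limit]:
        href = "?" + urlencode({"name": word})  # e.g. "?name=damian"
        items.append(
            f'<li><a href="{escape(href)}">Did you mean <b>{escape(word)}</b></a></li>'
        )
    return "\n".join(items)


print(did_you_mean([("damian", 0.5), ("adrian", 0.5)]))
```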

Bonus and conclusion

Ultimately, what TextBlob does is a re-implementation of Peter Norvig's original spelling corrector from 2007. I, too, wrote my own implementation in 2007. Depending on your needs, you could check the licensing of that source code, lift it out, and adapt it in your own way. But TextBlob wraps it up nicely for you.
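For the curious, here's a compact from-scratch sketch of the Norvig-style approach (not TextBlob's actual source): accept the word if it's known, otherwise take known words one edit away, otherwise two edits away, and rank the survivors by count, normalised so the confidences sum to 1. The `counts` dict stands in for the model file. In this sketch, ties are broken in reverse alphabetical order, which happens to reproduce the damian-before-adrian ordering mentioned above.

```python
from typing import Dict, List, Set, Tuple

LETTERS = "abcdefghijklmnopqrstuvwxyz"


def edits1(word: str) -> Set[str]:
    """All strings one deletion, transposition, replacement, or insertion away."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [a + b[1:] for a, b in splits if b]
    transposes = [a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1]
    replaces = [a + c + b[1:] for a, b in splits if b for c in LETTERS]
    inserts = [a + c + b for a, b in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)


def suggest(word: str, counts: Dict[str, int]) -> List[Tuple[str, float]]:
    """Return (word, confidence) pairs, best first, from a {word: count} model."""
    known = set(counts)
    one_edit = edits1(word)
    candidates = (
        {word} & known
        or one_edit & known
        or {e2 for e1 in one_edit for e2 in edits1(e1) if e2 in known}
        or {word}  # nothing known nearby: echo the input back
    )
    total = sum(counts.get(c, 0) for c in candidates) or 1
    ranked = sorted(((counts.get(c, 0) / total, c) for c in candidates), reverse=True)
    return [(c, p) for p, c in ranked]


print(suggest("darian", {"adrian": 1, "damian": 1}))  # [('damian', 0.5), ('adrian', 0.5)]
print(suggest("darian", {"adrian": 8, "damian": 3}))  # [('adrian', 0.7272727272727273), ('damian', 0.2727272727272727)]
```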

When you use the `textblob.en.Spelling` class, you have some choices. The first is what I did in my demo:

```python
path = "spelling-model.txt"
spelling = Spelling(path=path)
spelling.train(my_space_separated_text_blob, path)
```

What that does is create a file, spelling-model.txt, that wasn't there before. It looks like this (in my demo):

```
▶ head spelling-model.txt
aaron 1
abel 1
adam 1
adrian 1
aiden 1
alexander 1
andrew 1
angel 1
anthony 1
asher 1
```

The number on the right is the "frequency" of the word. But what if you have a "scoring" number of your own? Perhaps in your application you just know that adrian is more likely to be right than damian. Then you can make your own file. Suppose the text file (spelling-model-weighted.txt) contains lines like this:

```
...
adrian 8
damian 3
...
```
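If your scores live in a dictionary, writing that file could look like the sketch below. `write_weighted_model` is hypothetical; it writes one "word score" line per entry, sorted alphabetically like the trained file. I'd stick to whole-number scores, since the counts in these files are integers and I'm assuming the loader parses them that way.

```python
import os
import tempfile
from pathlib import Path
from typing import Dict


def write_weighted_model(scores: Dict[str, int], path: str) -> None:
    """Write {word: score} as "word score" lines, sorted alphabetically."""
    lines = [f"{word} {score}" for word, score in sorted(scores.items())]
    Path(path).write_text("\n".join(lines), encoding="utf-8")


# Usage: write to a temporary directory so the example leaves nothing behind.
with tempfile.TemporaryDirectory() as tmp:
    model_path = os.path.join(tmp, "spelling-model-weighted.txt")
    write_weighted_model({"damian": 3, "adrian": 8}, model_path)
    print(Path(model_path).read_text(encoding="utf-8"))
```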

Now, the output becomes:

```python
>>> import os
>>> from textblob.en import Spelling
>>> path = "spelling-model-weighted.txt"
>>> assert os.path.isfile(path)
>>> spelling = Spelling(path=path)
>>> spelling.suggest('darian')
[('adrian', 0.7272727272727273), ('damian', 0.2727272727272727)]
```

Based on the weighting, these numbers add up: 3 / (3 + 8) == 0.2727272727272727 and 8 / (3 + 8) == 0.7272727272727273.
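Put generally, and as an inference from the numbers above rather than documented behaviour: each candidate's confidence is its count $c(w)$ divided by the total count over the candidate set $C$.

```latex
p(w) = \frac{c(w)}{\sum_{v \in C} c(v)}
\qquad
p(\text{adrian}) = \frac{8}{8 + 3} \approx 0.727,
\quad
p(\text{damian}) = \frac{3}{8 + 3} \approx 0.273
```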

I hope this inspires you to write your own spelling application using TextBlob.

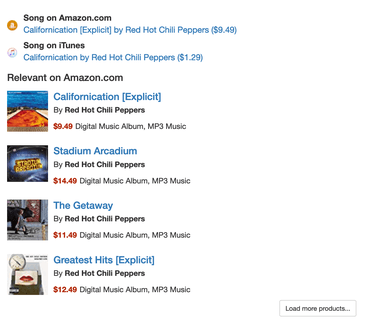

For example, you can feed it the names of the products on your e-commerce site. The .txt file might bloat if you have a lot of data, but note that the 30,000-line en-spelling.txt is only 314KB, and it loads in...:

```python
>>> from textblob import TextBlob
>>> from time import perf_counter
>>> b = TextBlob("I havv goood speling!")
>>> t0 = perf_counter(); right = b.correct(); t1 = perf_counter()
>>> t1 - t0
0.07055813199999861
```

...70ms for 30,000 words.