My personal blog was a regular Django website with jQuery (later switched to Cash) for dynamic bits. In December 2021 I rewrote it in NextJS. It was a fun journey and NextJS is great but it's really not without some regrets.

Some flashpoints for note and comparison:

React SSR is awesome

The way infinitely nested comments are rendered is isomorphic now. Before, I had to code it once as a Jinja2 template and once more in Cash (a small jQuery alternative). That's the nice part, and the promise, of React with server-side rendering.

JS bloat

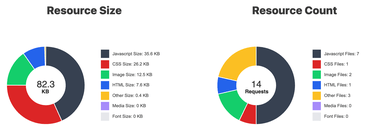

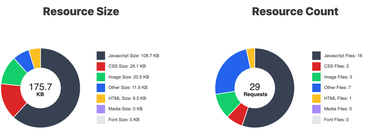

The total JS payload is now ~111KB in 16 files. It used to be ~36KB in 7 files. :(

Before

After

Data still comes from Django

Like any website, the web pages are made up from A) getting the raw data from a database, B) rendering that data in HTML.

I didn't want to rewrite all the database queries in Node (inside getServerSideProps).

What I did was move all the data-gathering Django code under an /api/v1/ prefix that publishes simple JSON blobs. This is exposed on 127.0.0.1:3000, which the Node server fetches from. I also wired up that API endpoint so I can debug it via the web too, e.g. /api/v1/plog/sort-a-javascript-array-by-some-boolean-operation

Now, all I have to do is write some TypeScript interfaces that hopefully match the JSON that comes from Django. For example, here's the getServerSideProps code for getting the data to this page:

    const url = `${API_BASE}/api/v1/plog/`;
    const response = await fetch(url);
    if (!response.ok) {
      throw new Error(`${response.status} on ${url}`);
    }
    const data: ServerData = await response.json();
    const { groups } = data;
    return {
      props: {
        groups,
      },
    };

I like this pattern! Yes, there are overheads, and Node could talk directly to PostgreSQL, but the upside is decoupling. And with good outside caching, the cost of the extra hop rarely matters.
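The "hopefully match" part is worth dwelling on: TypeScript interfaces vanish at runtime, so `await response.json()` is trusted blindly. A minimal sketch of a runtime check follows. The shapes are assumptions for illustration: only `groups`, `oid` and `title` appear in this post, while the `date` and `posts` fields and all the function names are hypothetical.

```typescript
// Hypothetical shapes: only `groups`, `oid` and `title` appear in this post.
// The `date` and `posts` fields are assumptions made for illustration.
interface Post {
  oid: string;
  title: string;
}

interface Group {
  date: string;
  posts: Post[];
}

interface ServerData {
  groups: Group[];
}

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null;
}

function isPost(value: unknown): value is Post {
  return (
    isRecord(value) &&
    typeof value.oid === "string" &&
    typeof value.title === "string"
  );
}

function isGroup(value: unknown): value is Group {
  return (
    isRecord(value) &&
    typeof value.date === "string" &&
    Array.isArray(value.posts) &&
    value.posts.every(isPost)
  );
}

// Runtime check that the JSON from Django has the shape the interface claims.
function isServerData(value: unknown): value is ServerData {
  return (
    isRecord(value) &&
    Array.isArray(value.groups) &&
    value.groups.every(isGroup)
  );
}
```

Inside getServerSideProps, the check would sit between `response.json()` and the `return`, throwing when `isServerData(data)` is false, so that a mismatch between Django and the interfaces surfaces as a server error instead of a half-rendered page.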

Server + CDN > static site generation

I considered full-blown static generation, but it's not an option. My little blog only has about 1,400 blog posts, but you can also filter by tags, by combinations of tags, and paginate through any of those combinations, e.g. /oc-JavaScript/oc-Python/p3. So the total number of pages is probably in the tens of thousands.
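To make the shape of those URLs concrete, here is a rough sketch of how a path like /oc-JavaScript/oc-Python/p3 breaks down into tags and a page number. The function name and the exact rules (tags prefixed with `oc-`, an optional trailing `p<N>`) are inferred from that one example URL, not taken from the site's actual routing code.

```typescript
interface Listing {
  tags: string[]; // from "oc-<tag>" segments
  page: number; // from a trailing "p<N>" segment, defaulting to 1
}

// Returns null when a segment is neither a tag nor a trailing page marker.
function parseListingPath(pathname: string): Listing | null {
  const tags: string[] = [];
  let page = 1;
  const segments = pathname.split("/").filter(Boolean);
  for (let i = 0; i < segments.length; i++) {
    const segment = segments[i];
    const pageMatch = /^p(\d+)$/.exec(segment);
    if (segment.startsWith("oc-") && segment.length > 3) {
      tags.push(segment.slice(3));
    } else if (pageMatch && i === segments.length - 1) {
      page = parseInt(pageMatch[1], 10);
    } else {
      return null;
    }
  }
  return { tags, page };
}
```

Every distinct combination of `tags` and `page` is its own URL, which is why the page count grows so much faster than the post count.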

So, server-side rendering it is. To accomplish that I set up a very simple Express server. It proxies some stuff over to the Django server (e.g. /rss.xml) and then lets NextJS handle the rest.

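    import next from "next";
    import express from "express";

    // Assumed setup: dev mode and port come from the environment.
    // The fallback port 4000 is an arbitrary placeholder.
    const dev = process.env.NODE_ENV !== "production";
    const port = parseInt(process.env.PORT || "4000", 10);

    const app = next({ dev });
    const handle = app.getRequestHandler();

    app
      .prepare()
      .then(() => {
        const server = express();

        // Let NextJS handle every request that wasn't proxied elsewhere.
        server.use((req, res) => handle(req, res));

        server.listen(port, () => {
          console.log(`> Ready on http://localhost:${port}`);
        });
      })
      .catch((err) => {
        console.error(err);
        process.exit(1);
      });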

Now, my site is behind a CDN. And technically, it's behind Nginx too where I do some proxy_pass in-memory caching as a second line of defense.

Requests come in like this:

- from user to CDN

- from CDN to Nginx

- from Nginx to Express (proxy_pass)

- from Express to next().getRequestHandler()

I set Cache-Control by calling res.setHeader("Cache-Control", "public,max-age=86400") from within the getServerSideProps functions in the src/pages/**/*.tsx files. Once that's set, the response is cached both in Nginx and in the CDN.
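As a rough sketch of what that looks like inside a page file: the `HeaderWritable` interface and the `setPublicCache` helper below are hypothetical names, introduced so the example does not depend on the `next` package's types. Only the header name and value come from the setup described above.

```typescript
// Minimal stand-in for the part of Node's ServerResponse used here, so the
// sketch does not depend on the `next` package's types.
interface HeaderWritable {
  setHeader(name: string, value: string): void;
}

const ONE_DAY_SECONDS = 60 * 60 * 24; // 86400, the max-age quoted above

// Hypothetical helper: marks the response as cacheable by Nginx and the CDN.
function setPublicCache(res: HeaderWritable, maxAgeSeconds: number): void {
  res.setHeader("Cache-Control", `public,max-age=${maxAgeSeconds}`);
}

// Shaped like a NextJS getServerSideProps function. In a real page file it
// would be exported, and `context.res` would be the actual response object.
async function getServerSideProps(context: { res: HeaderWritable }) {
  setPublicCache(context.res, ONE_DAY_SECONDS);
  return { props: {} };
}
```

Keeping the header in one helper makes it easy to give different pages different lifetimes later, for example a shorter max-age for the home page than for old posts.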

Any caching is tricky when you need to do revalidation. Especially when you roll out a new central feature in the core bundle. But I quite like this pattern of a slow-rolling upgrade as individual pages eventually expire throughout the day.

There is a nasty bug with this and I don't yet know how to solve it. Client-side navigation depends on hashing. So loading this page, when done with client-side navigation, becomes a request for /_next/data/2ps5rE-K6E39AoF4G6G-0/en/plog.json (no, I don't know how that hashed URL is determined). But if a new deployment happens, the new URL becomes /_next/data/UhK9ANa6t5p5oFg3LZ5dy/en/plog.json, so you end up with a 404 because you started on a page based on an old JavaScript bundle that is now invalid.

Thankfully, NextJS handles it quite gracefully by throwing an error on the 404 so it proceeds with a regular link redirect which takes you away from the old page.
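The general idea of that fallback can be sketched as follows. This is not NextJS's actual implementation, just an illustration of the behavior described above; every name here (`navigate`, `FetchData`, `hardNavigate`) is made up, and the fetching and rendering are passed in as functions so the sketch stays self-contained.

```typescript
// Minimal response shape, so the sketch runs without DOM or Node typings.
interface DataResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}

type FetchData = (url: string) => Promise<DataResponse>;

// Tries the client-side route first. If anything fails (for example a 404
// because the hashed segment in the data URL belongs to an old deployment),
// it falls back to a full page load of the target href.
async function navigate(
  href: string,
  dataUrl: string,
  fetchData: FetchData,
  render: (data: unknown) => void,
  hardNavigate: (href: string) => void
): Promise<"client" | "full-reload"> {
  try {
    const response = await fetchData(dataUrl);
    if (!response.ok) {
      throw new Error(`${response.status} on ${dataUrl}`);
    }
    render(await response.json());
    return "client";
  } catch (error) {
    hardNavigate(href);
    return "full-reload";
  }
}
```

In a browser, `hardNavigate` would simply assign the href to `window.location.href`, which loads the page from the new deployment together with its new JavaScript bundle.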

Client-side navigation still sucks. Kinda.

Next has a built-in <Link> component that you use like this:

    import Link from "next/link";

    ...

    <Link href={"/plog/" + post.oid}>
      {post.title}
    </Link>

Now, clicking any of those links will automatically enable client-side routing. Thankfully, it takes care of preloading the necessary JavaScript (and CSS) simply by hovering over the link, so that when you eventually click it just needs to do an XHR request to get the JSON necessary to be able to render the page within the loaded app (and then do the pushState stuff to change the URL accordingly).

It sounds good in theory but it kinda sucks because unless you have a really good Internet connection (or if you happen to hit a CDN-cold URL), nothing happens when you click. This isn't NextJS's fault, but I wonder if it's actually horrible for users.

Yes, it sucks that a user clicks something but nothing happens. (I think it would be better if it was a button-press and not a link because buttons feel more like an app whereas links have deeply ingrained UX expectations). But most of the time, it's honestly very fast and when it works it's a nice experience. It's a great piece of functionality for more app'y sites, but less good for websites where most of the traffic comes from direct links or Google searches.
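For reference, the hover-triggered preloading mentioned above boils down to "start loading once, reuse the in-flight result". A minimal sketch of that idea, not NextJS's own code: `createPrefetcher` and `Loader` are hypothetical names, and the loader that actually fetches the JavaScript and CSS is passed in.

```typescript
type Loader = (href: string) => Promise<unknown>;

// Returns a prefetch function that starts loading a page's assets at most
// once per href and hands back the same in-flight promise on repeat calls.
// Simplification: a failed load stays cached; a real version would evict it.
function createPrefetcher(load: Loader): (href: string) => Promise<unknown> {
  const inFlight = new Map<string, Promise<unknown>>();
  return (href) => {
    let pending = inFlight.get(href);
    if (!pending) {
      pending = load(href);
      inFlight.set(href, pending);
    }
    return pending;
  };
}
```

A link's hover handler would call the returned function, and the click handler would await the same promise. That also shows where the dead time comes from: the JSON for the page is only requested on click, so a slow connection or a CDN-cold URL leaves the user waiting with no feedback.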

NextJS has built-in critical CSS optimization

Critical inline CSS is critical (pun intended) for web performance. Especially on my poor site where I depend on a bloated (and now ancient) CSS framework called Semantic-UI. Without inlining the critical CSS, every page would first have to load the full minified CSS file, which is over 200KB.

In NextJS, to enable inline critical CSS loading you just need to add this to your next.config.js:

    experimental: { optimizeCss: true },

and you have to add critters to your package.json. I've found some bugs with it but nothing I can't work around.

Conclusion and what's next

I'm very familiar and experienced with React but NextJS is new to me. I've managed to miss it all these years. Until now. So there's still a lot to learn. With other frameworks, I've always been comfortable that I don't actually understand how Webpack and Babel work (internally) but at least I understood when and how I was calling/depending on them. Now, with NextJS there's a lot of abstracted magic that I don't quite understand. It's hard to let go of that. It's hard to use powerful tools that are complex and created by large groups of people and understand it all too. If you're desperate to understand exactly how something works, you inevitably have to scale back the amount of stuff you're leveraging. (Note, it might be different if it's absolutely core to what you do for work and hack on for 8 hours a day.)

The JavaScript bundles in NextJS lazy-load quite decently but it's definitely more bloat than it needs to be. It's up to me to fix it, partially, because much of the JS code on my site is for things that technically can wait such as the interactive commenting form and the auto-complete search.

But here's the rub: my site is not an app. Most traffic comes from people doing a Google search, clicking on my page, and then buggering off. It's quite static that way, and who am I to assume that they'll stay and click around and reuse all that loaded JavaScript code?

With that said, I'm going to start an experiment to rewrite the site again in Remix.

Comments

Here's a Webpagetest comparison of the NextJS implementation compared to the old Jinja + Cash implementation:

https://webpagetest.org/video/compare.php?tests=211217_BiDcRT_329dbd7e3eff52289a96eb390c8620b1,211217_AiDcG4_a764a17f4a6fd82e29692daeaee3a336

I never understand the logic behind NextJS. The whole point of React and Angular was to put all the client logic on the client side, a clean separation of concerns, away from the ugliness of JSP, PHP, ASP, etc. Now we are going back to the server again? This just hurts my brain.

Client side rendering = bad SEO or not working SEO at all. SSG or SSR fixes that.

Client-side rendering works in SEO. When Google indexes your site, they're using JavaScript just like any other browser.

Worry less about SEO and worry more about making the pages perfect for real users.

Server rendered pages are responding faster because they're not blocked by waiting for JS to download, parse, execute. That's why they feel faster. When they feel faster, it's better SEO.

No, please don't take everything that Google says at face value; it is a lesson learnt the hard way. SEO goes in the trash with client-side rendering. The entire NextJS SSR / SSG / ISR evolved because Google was dumb and unable to execute JavaScript in the first place.

You should check out Hugo https://blog.elijahlopez.ca/posts/hugo-tutorial/ . Often I find that people are using next js for the wrong types of websites. For example the tutorial by fireship just displays text with an image and no styling. What's wrong with a server + html then? And then there was a person in the comments section saying they wanted spa but it was tricky to do with nextjs but wanted the ssr as if nextjs is the only tool to get ssr. And last of all, jinja2 is great if you know what you're doing. I remember my website with flask started off with html files and jinja2 variables and it took a year to realize that jinja2 has the includes feature. I think the front end tutorials only exist for webdevs but never backend devs. That's what the developer industry is lacking; front end tools for backend devs and Hugo is a good start for at least static content.

NextJS really shines with edge hosting; consider Vercel or Cloudflare R2 for your blog and you'll feel the difference.

My site is hosted on the Edge already. A CDN :)

Because the content is static, only the first request, per URL, goes to the backend server (in US East) and the rest comes from the CDN pops.